Editor’s note: Jon Fisher is currently a conservation science officer at The Pew Charitable Trusts where he provides scientific expertise to inform and improve research projects and helps to increase the impact of scientific research. He was formerly a senior conservation scientist at The Nature Conservancy where he led and conducted research as a principal investigator and conducted internal theory of change work. He and co-authors recently published a paper “Improving scientific impact: how to practice science that influences environmental policy and management” in the journal Conservation Science and Practice. Fisher presented a webinar on this research to the OCTO networks (including the EBM Tools Network) in December 2019, and we highly recommend reading the paper and watching the webinar recording.

Skimmer: As you describe in your paper, a lot of scientific research that is intended to be applied isn’t ever used – because decision-makers are unaware of it, aren’t able to access it, don’t understand it, or don’t see it as relevant. Your recent paper outlines practical steps for improving the impact of science on decision making. Could you give us a summary of those steps?

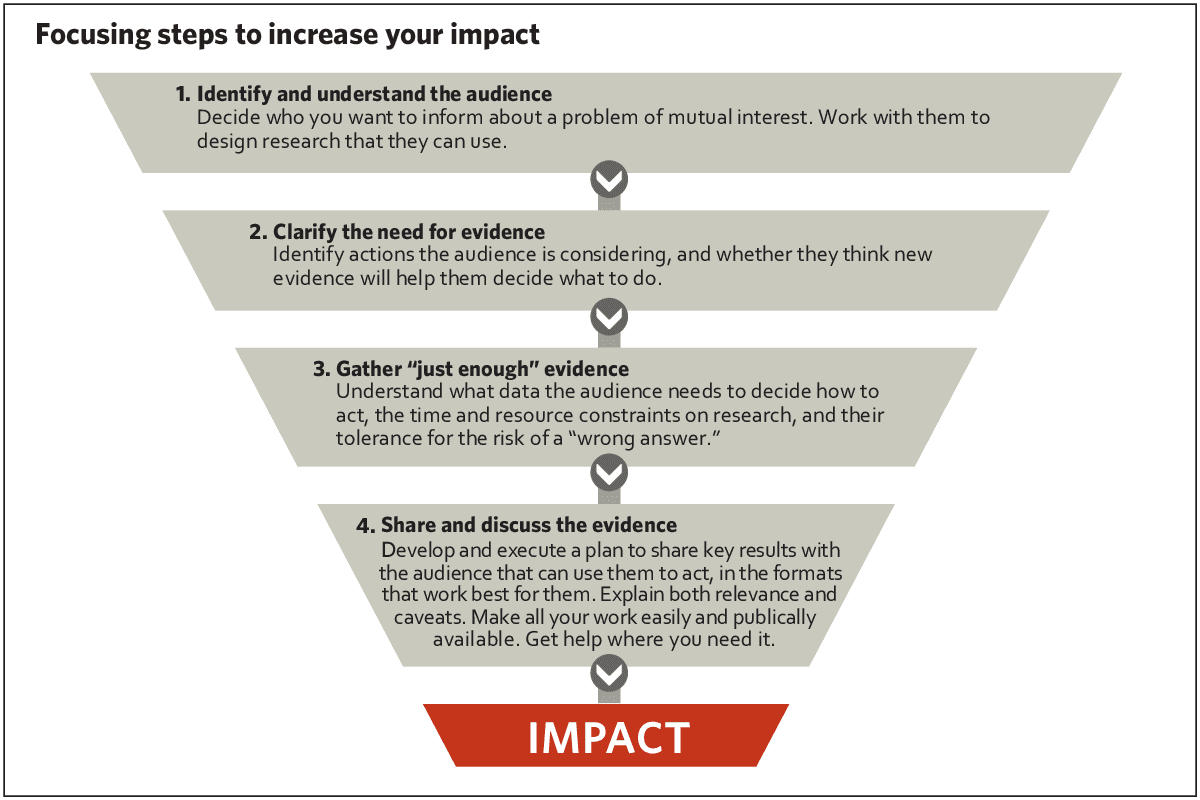

Fisher: Sure, at a high level we recommend four steps:

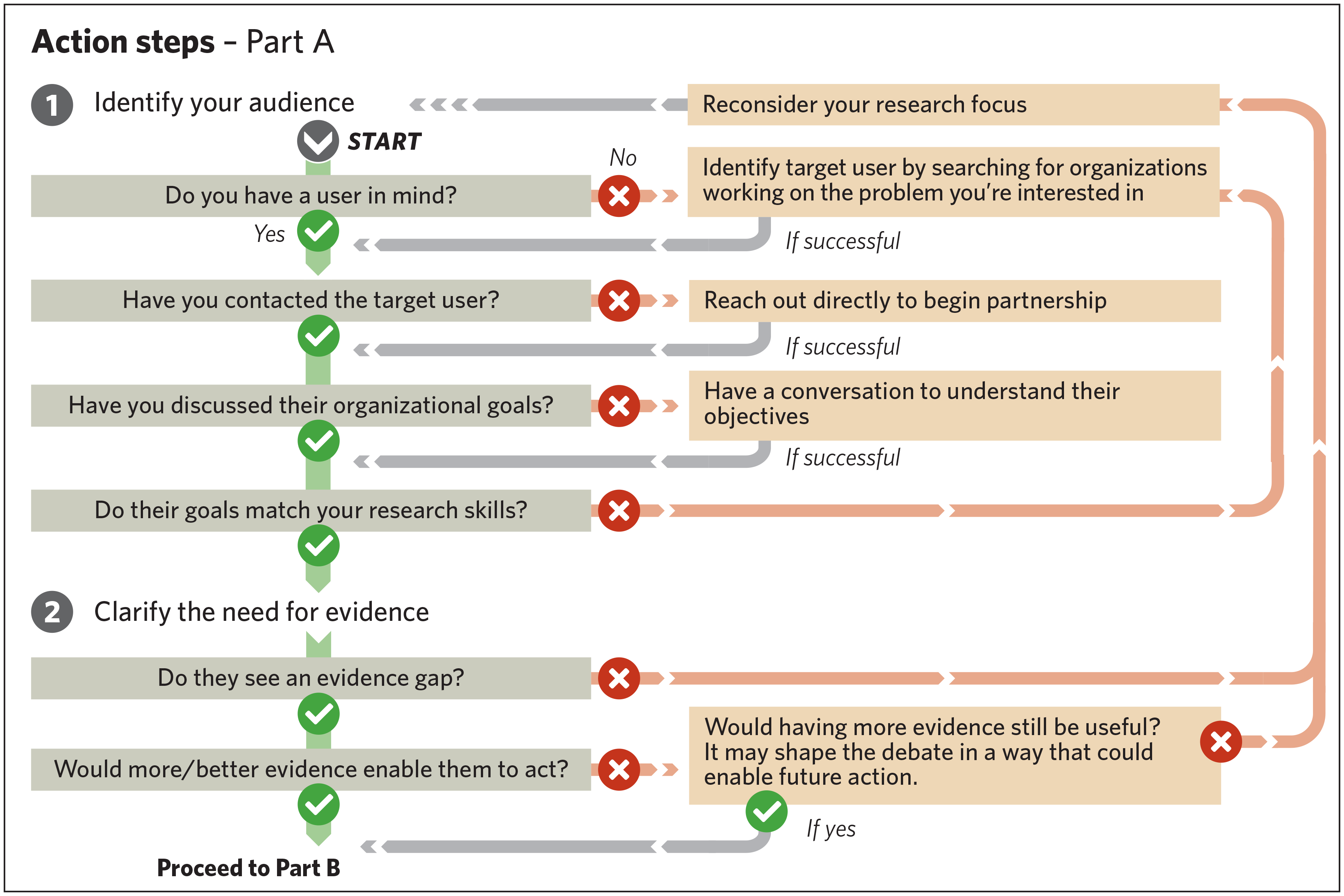

- Identify and understand the audience (e.g., a decision-maker with whom you can partner)

- Clarify the need for evidence (i.e., how new information could lead to action)

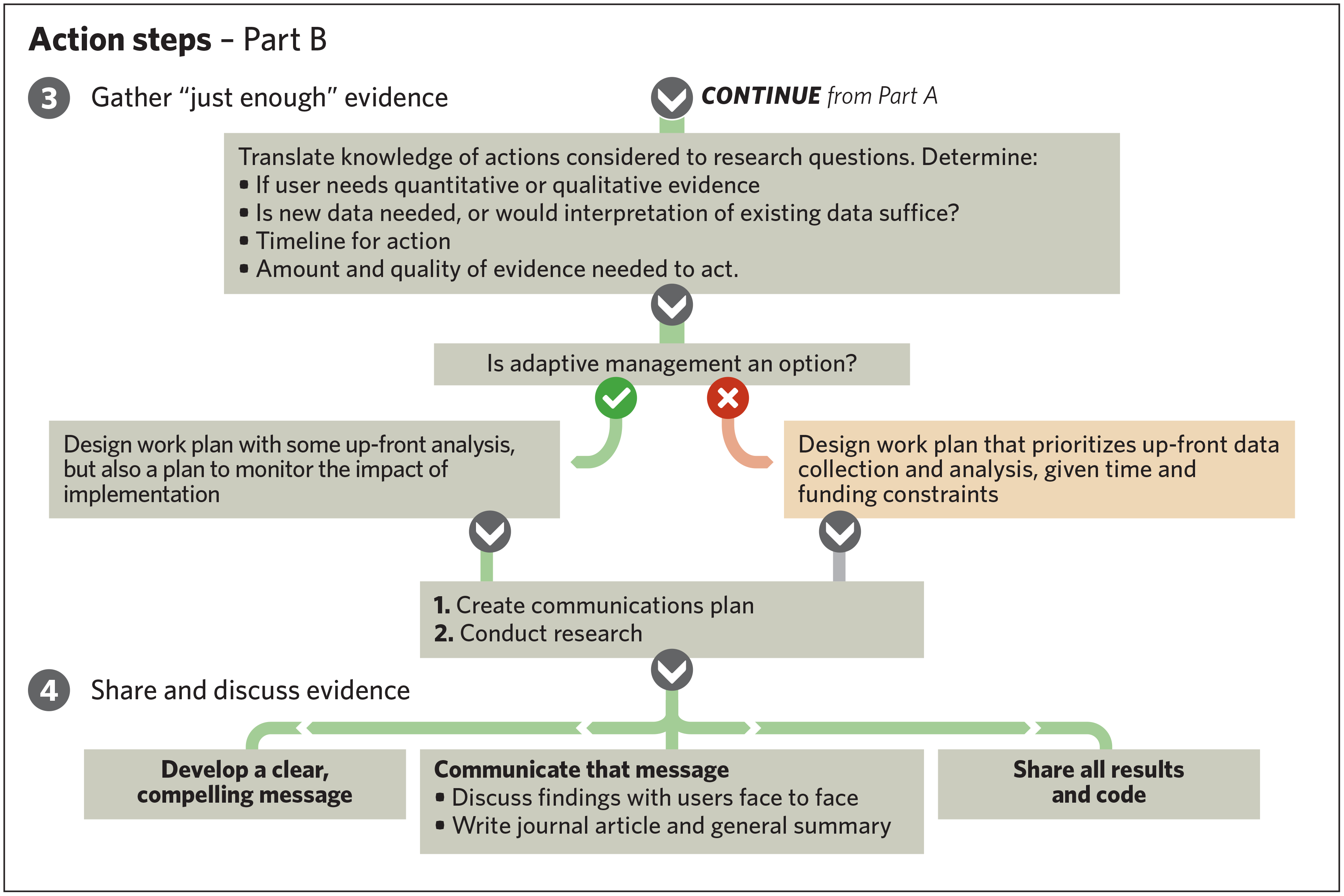

- Gather "just enough" evidence (i.e., so there is enough rigor to be credible without missing key decision-making deadlines or wasting resources on gathering extraneous information)

- Share and discuss the evidence (i.e., help people learn about your results and motivate them to act on them).

These are guidelines rather than a strict recipe for success because there are many factors that determine the impact that research has. But following these steps improves the odds of research being influential. In fact, we ourselves have found that not following these steps in past projects has led to disappointing outcomes.

Skimmer: You and your co-authors conducted this research in a very open, collaborative way – soliciting peer review on drafts and even presenting this research on one of OCTO’s webinars. You have said that this feedback changed your thinking in some ways. Can you tell us more about this?

Fisher: Yes, this has been quite a journey! The idea for developing these guidelines began in a subgroup discussion from a Science for Nature and People Partnership soil carbon group about 2.5 years ago. We asked experts in the field what they thought scientists needed to do for their science to influence policy and management. We also talked to colleagues who wished for a guide like this to find out what they needed from it. From these conversations we came up with an early outline. As we heard different perspectives, our understanding of what would be most useful shifted a lot. We wrote an early draft of a manuscript and asked ~20 people, including non-scientists, for feedback and to check it for clarity.

At that point (~1.5 years ago) we had a paper we were happy with, but we didn’t know whether the feedback we got was representative of the broader conservation science community. So, we uploaded a preprint to EcoEvoRxiv in March 2019, something none of us had ever done before, and asked readers to send us more input. Every time we revised the paper, we updated the preprint. We also started giving small talks based on the paper in May 2019. The OCTO webinar in December 2019 was the first time we shared it with a big audience.

It was validating to see how much hunger there was for guidelines like this, and this process helped us see which parts of the paper were working well. At the same time, we got critiques that forced us to continually reevaluate our beliefs and assumptions. So, while it was humbling and required lots of patience, it was overall a phenomenal experience. Between talks and sharing the preprint, we reached well over a thousand people before the paper was even accepted!

Skimmer: One of the recommendations that really struck me was about gathering “just enough evidence”. Can you tell us more about how a scientist figures out what “just enough evidence” is and what some of the pitfalls of gathering too much evidence are?

Fisher: This concept was what got us interested in this topic in the first place. There is a field called “value of information” which studies this, but this field is typically pretty mathematical and abstract. As scientists, we often think about the knowledge we want to generate rather than the decision we need to inform. To be relevant to decision making, we may need less information than we think, and we probably need it faster than we would like.

One example was a project I worked on in Brazil. The idea was to use natural processes to help reduce erosion and associated sediment loading and thus bring down water treatment costs. We did some exciting research using very high-resolution satellite imagery, predicting future land use change, hydrological modeling, and economic analysis. We were proud that we could rigorously show the water treatment company how many years it would take for them to recoup their investment in conservation. But it turns out that they decided to move ahead with the work before we even completed our modeling, so they did not need nearly as much detail as we thought. The Nature Conservancy spent roughly the same amount of money on the research as we did paying landowners to make changes on the ground that would improve water quality! When we quickly repeated the analysis using coarser (and free) satellite imagery, we found we probably could have saved tens of thousands of dollars, which could then have been spent on more conservation to improve water quality. So “too much” research has real costs in conservation. But it takes careful discussion and planning to get it just right.

Skimmer: Was there anything that really surprised you from your research or anything that you found counterintuitive?

Fisher: Our biggest surprise was how hard it was to follow our own advice at times! We laughed about this a lot when writing the paper, but it also helped us to improve it. For example, early on we realized we were still a bit muddy on who our audience was and how our paper could empower them to make change. There were several points like this where we needed help from outside our group: other conservation scientists, policy experts, communications professionals, etc. But while it was hard to figure all of this out, we were surprised and pleased by how much better the paper got as a result. Another surprise was that we heard very strong demand for a paper like this, but the people we spoke to with the most expertise in the field (including the editor and reviewers at the first journal we submitted to) didn’t see it as novel. Essentially, we worked to produce a stand-alone publication that makes research impact advice accessible to a broad range of scientists. Most of our recommendations have already been published elsewhere, but we have found a very low level of awareness of those recommendations in our target audience. It is ironic that advice for planning effective communications has not been well communicated to scientists.

All figures from Fisher, JRB, Wood, SA, Bradford, MA, Kelsey, TR. Improving scientific impact: How to practice science that influences environmental policy and management. Conservation Science and Practice. 2020; 2:e0210. https://doi.org/10.1111/csp2.210